Adaptive images: Practices and aesthetics of situative digital imaging

by Kathrin Friedrich, Moritz Queisner, Matthias Bruhn

Situative digital imaging and adaptive images

Digital images are an integral part of everyday routines and workflows in medical diagnostics, architectural planning, entertainment, or industrial production; they constitute a central interface or point of access that determines specific forms of interaction (e.g. gestures) and knowledge (e.g. in terms of perception or decision-making). The second wave of digitisation, also referred to as ‘industry 4.0’, brings about a paradigm shift that challenges established practices of imaging and image-related action. New sensor and display technologies as well as self-learning algorithms support, extend, and control the relation between humans and computing; body and behaviour are increasingly captured and processed by digital means and correlated with their spatial environment.[1] While the transition from analogue to digital images since the 1970s has changed the modes of operating with images, we are now confronted with a new generation of imaging tools that not only assist human action but guide and even anticipate it. The consequences of this shift have become most apparent in medical contexts, where imaging technologies intervene in the practice of physicians by supporting diagnosis or guiding surgical interventions,[2] and in military contexts, where computer-aided and robotic weapon technologies generate operational images in the form of visualised sensor data that constitute the primary, often sole, basis for action and perception.[3]

Moreover, in the everyday realm of personalised marketing and entertainment, and with ever more sophisticated photo and video editing capabilities, photographic and video-based content has become deeply intertwined with computer-generated imagery. Streaming services such as Netflix seek ways to generate ad revenue by embedding computer-generated imagery into their shows and movies. Placing or transforming virtual objects within a video or television transmission has become a new concept in personalised advertising. What distinguishes it from existing forms of product placement is the ability to automate the process, using video analysis to detect objects and areas suitable for the integration of digital images or objects. This is achieved by deploying artificial neural networks to identify suitable areas for product placement and replacement and by rendering virtual content into the video in real time (Fig. 1). With access to vast amounts of customer information, providers are now able to generate and supply visual content that allegedly suits the viewer’s data profile (viewing and consumption preferences, age, gender, location, etc.).

Since personalised advertising based on the metrics of the social web accelerated the decline of the classic television-era commercial, image production has been automated and controlled by software technologies to an extent that affects content creation down to the level of a single frame and up to the viewing experience of the individual user. Brand names can be displayed in unoccupied areas of a news stream, products added to a movie scene, and billboards in a soccer stadium superimposed and extended with virtual content in a way that does not obscure players or objects in the foreground (Fig. 2). Sports advertising adapts to streaming locations, game situations, or even camera angles, and song contest auditions can be personalised right down to the jury’s coffee cups, decorated with virtual brands to match the taste indicated by the viewer’s online-shop orders, all in real time. While viewers have become more skilled at identifying product placements or bypassing advertising, e.g. by clicking it away, this concept of virtual product placement and replacement implies that our perception of these images is always situatively under someone’s (or, most likely, an AI’s) control. In other words, digital images increasingly amalgamate with the situation and context of their presentation. On the basis of metadata, the confocal imagery of photography and video merges with the technology by which it is controlled and manipulated.

Fig. 1: Rendering virtual content into a video based on image analysis using artificial neural networks (courtesy of Mirriad Inc., https://youtu.be/npW0OTWOWLE, 2019).

Fig. 2: Virtual billboard advertising in a soccer stadium (courtesy of Supponor Ltd., https://youtu.be/AJtLAYmdgTw, 2018).
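The billboard replacement described above, in which virtual advertising is inserted without covering the players in front of it, can be reduced to a simple per-pixel compositing rule. The following Python sketch is purely illustrative: it assumes that a mask of the billboard surface and a mask of foreground objects (players, ball) are already available for each frame. Producing these masks is where the actual computer-vision work of commercial systems lies, and the source does not describe how it is done. The function name `composite_frame` and the toy data are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # RGB colour value
Frame = List[List[Pixel]]     # rows of pixels
Mask = List[List[bool]]       # per-pixel flags (hypothetical, assumed precomputed)


def composite_frame(camera: Frame, virtual: Frame,
                    billboard: Mask, foreground: Mask) -> Frame:
    """Replace billboard pixels with virtual content unless a foreground
    object (e.g. a player) occludes the billboard at that pixel."""
    out: Frame = []
    for y, row in enumerate(camera):
        out_row: List[Pixel] = []
        for x, pixel in enumerate(row):
            if billboard[y][x] and not foreground[y][x]:
                out_row.append(virtual[y][x])  # show the virtual advert
            else:
                out_row.append(pixel)  # keep the camera image (players, pitch, crowd)
        out.append(out_row)
    return out
```

In a one-row toy frame where the first two pixels belong to the billboard and a player stands in front of the second, only the first pixel is replaced; the occluding player remains visible.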

The examples in Figs. 1 and 2 show how imaging technology produces and actualises content according to spatial and temporal parameters, as well as with regard to social contexts and commercial interests. This linking of image and space in real time is not limited to the boundaries of the image or the screen but extends far into physical space. With the ability to register and process physical space, to track and trace movement in real time, and to transform it into geometrical shapes, the spatial environment itself becomes computable. Augmented-reality apps like Ikea Place, for example, allow consumers to capture the dimensions of their apartment and visualise the look and fit of furniture in real time using the camera of their smartphone or tablet. The app renders 3D virtual objects in accordance with the scale and topography of the user’s environment in real time. By changing the camera’s direction and using the app’s graphical interface, users are able to visualise virtual furniture in their home and to ‘move and turn’ it (Fig. 3). To do so, the app’s interface instructs the user to align the camera image with physical references such as the floor, which is then automatically detected by the software. On an operational level, such images depend on the user’s position, as users continuously adjust the mobile device to their point of view and their movement. In addition, the app is connected to Ikea’s stock database and provides further information about the furniture.

Fig. 3: Customers can use the augmented-reality Ikea Place App for testing the look and fit of (virtual) Ikea furniture in the physical space of their living room. (Inter IKEA Systems B.V. https://youtu.be/UudV1VdFtuQ, TC:0:47).

This example of location-dependent digital imaging points to multiple adaptation processes that act together in order to guarantee the functionality of the app, namely to merge virtual objects with the scale and topography of physical space. On a technological level, adaptive imaging combines the process of data visualisation with the registration of the topographical qualities of physical space. Based on a spatial reference system that transforms objects and processes into geometrical forms, images can be related to the orientation of a user or a device as well as to a physical object. In combination with increased computing power, ever faster processing of large amounts of data, and advances in computer vision and machine learning, adaptive imaging is driving a new generation of digital applications.
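The anchoring of virtual furniture on a detected floor, as in the Ikea Place example, can be illustrated with an elementary geometric operation: a ray is cast from the camera through the point the user selects on the screen and intersected with the plane that represents the floor. The sketch below assumes that the floor has already been detected as a horizontal plane at a known height and that positions are measured in metres. It is not Ikea's implementation, and the function `hit_floor` is hypothetical.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in metres, y pointing up


def hit_floor(origin: Vec3, direction: Vec3, floor_y: float) -> Optional[Vec3]:
    """Intersect the ray origin + t * direction (t >= 0) with the horizontal
    plane y = floor_y. Returns the 3D anchor point, or None if the ray
    never reaches the floor."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if abs(dy) < 1e-9:  # ray runs parallel to the floor
        return None
    t = (floor_y - oy) / dy
    if t < 0:  # the floor lies behind the ray's starting point
        return None
    return (ox + t * dx, oy + t * dy, oz + t * dz)
```

With the device held 1.5 m above the floor and the ray pointing forward and downward at 45 degrees, the anchor lies 1.5 m in front of the user. A furniture model authored in metres can then be placed at that point without rescaling, which is one way to read the claim that virtual objects match the scale of physical space.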

While digital images usually separate the object and its representation from each other spatially, applications like Ikea Place seem to merge computer-generated images with the physical world. These practices of situative and context-specific digital imaging in popular media applications are based on visualisation techniques that synchronise image, action, and space. We consider them an emerging class of visual media, which we propose to call adaptive images.

This paper aims to provide a systematic and typological survey of adaptive images as a phenomenon and image type, and to name theoretical challenges in order to encourage further in-depth research. Based on a series of application-related case studies, we identify three essential aspects of adaptive images, namely their aesthetic, operational, and spatial dimensions. We conclude by describing the implications of these characteristics for image and media theory, and we argue for a theoretical move from the analysis and dichotomy of visual patterns or imaging processes towards a combined analysis of the situations these processes are connected with.

Cases of adaptive imaging

Adaptive images can be found in entertainment, for example in sports broadcasting or film production, and in industry 4.0 applications such as manufacturing processes, immersive training, or robot-assisted surgery. On a technological level, these applications have in common that they incorporate new technical possibilities for real-time image production, processing, and transmission. On a theoretical level, their impact and implications for action and perception have yet to be explored, in particular how they enable or disable certain ways of seeing, acting, and decision-making. In order to describe the phenomenon of adaptivity more precisely and to sketch out common characteristics of adaptive images, we assemble a series of cases below. The cases are both emblematic of practices and contexts of adaptive imaging and instructive for elaborating theoretical considerations. Together, these case studies ‘represent a problem-event that has animated some kind of judgment’,[4] as we assemble them to provide an overview of what we consider to be phenomena and types of adaptive images.

a) Virtual film production: The Volume

Fig. 4: The Volume in use on the set of The Mandalorian (courtesy of The Walt Disney Inc., source: https://dmedmedia.disney.com/disney-plus/disney-gallery-the-mandalorian/technology?image_id=dgtm_ep_4-1_769715f1)

Recent practices of virtual film production rely on a dynamic adaptation of virtual and physical elements within a coherent film aesthetic already during shooting.[5] As special effects (SFX) and computer-generated imagery (CGI) have steadily increased their share in current film and television production, filmmakers are challenged to bridge the divide between what they ‘can see through the camera on the live-action set, and what they have to imagine will be added digitally many months later’.[6] Virtual production closes this imaginative gap between the physical and virtual layers of the final film already during production on set, through different combinations of game engines, VR and AR, camera tracking, and other hardware and software configurations. A specific technological setup that significantly influences film aesthetics was recently introduced by the visual effects company Industrial Light and Magic (ILM). The virtual production methodology referred to as The Volume was intended to circumvent lengthy and costly post-production for the Disney+ series The Mandalorian. A built environment, it incorporates a suite of technologies in which the physical and virtual layers of the live-action images dynamically adapt to each other during shooting. Technologically, The Volume consists of a stage surrounded by LED walls which dynamically display digital images depicting large-scale virtual sets (Fig. 4). These virtual sets ‘were drafted by visual effects artists as 3D models in Maya, onto which photographic scans were mapped’.[7] The focus of the visual aesthetics and their effects thus shifts from post-production to pre-production, while the photorealistic experience of (futuristic and remote) settings is enhanced for filmmakers and, finally, for viewers.

The virtual production methodology not only requires filmmakers to create virtual spaces that are visualised on the LED panels; it also requires parts of the scenes to be built as a physical stage on which the actors can interact with each other and with the camera. While shooting, the visualisation of the virtual scenes is synchronised with the position and perspective of the tracked film camera. ‘When the camera pans along with a character the perspective of the virtual environment (parallax) moves along with it, recreating what it would be like if a camera were moving in that physical space.’[8] The synchronisation of in-camera capturing, on-set actions, and virtual visualisations creates a parallax effect which, in turn, affects the aesthetics of the entire film.[9] Without a coherent yet dynamic spacetime for physical and virtual entities, the aesthetics of the virtual production methodology would not be perceptually convincing.[10]
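The parallax effect described in the quotation follows from perspective projection: when the camera changes its position, nearby points shift across the image more than distant ones (a pure rotation about the lens centre would not produce parallax). This is why the virtual environment on the LED walls has to be re-rendered from the tracked camera position. The minimal sketch below shows only this geometric principle for a pinhole camera moving sideways; the focal length, the distances, and the function names are illustrative assumptions, not details of ILM's system.

```python
def project_x(point_x: float, point_z: float, camera_x: float,
              focal_px: float = 1000.0) -> float:
    """Horizontal image coordinate (pixels, relative to the image centre) of a
    point at lateral position point_x and depth point_z (metres), seen by a
    pinhole camera at camera_x looking straight ahead along the depth axis."""
    return focal_px * (point_x - camera_x) / point_z


def image_shift(point_z: float, camera_move: float,
                focal_px: float = 1000.0) -> float:
    """Pixels by which a point straight ahead moves in the image when the
    camera translates sideways by camera_move metres."""
    before = project_x(0.0, point_z, 0.0, focal_px)
    after = project_x(0.0, point_z, camera_move, focal_px)
    return after - before
```

For the same 0.5 m camera move, a prop 2 m away shifts by 250 pixels, while a background mountain modelled at 50 m shifts by only 10. A static backdrop would instead shift as if it were located at the wall's physical distance; rendering from the tracked camera restores the depth-dependent difference.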

b) Closer than life: Augmented sports imaging

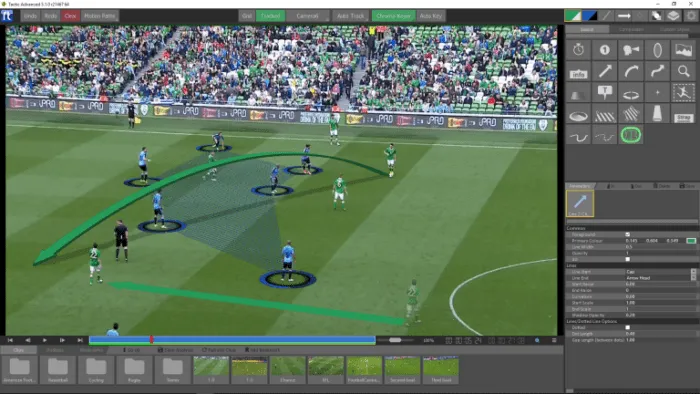

Fig. 5: Presenter interface of the sports analytics software Tactic Advanced (RT Software, 2020).

For more than 100 years, sports have been the prototypical example of fast and direct visual representation and transmission. Made possible by powerful optical technologies and linked to the photo and film industry, the press, and entertainment, the liveliness of the sports image could serve as the perfect representative of the scopic regime of modernity as such. In the digital era, the continuous exchange with the styles of (and expectations set by) computer gaming has continued to turn sports into a collective visual interaction, in which real players have to submit to the laws of mass media and sports television, which employ the potential of AR and MR applications to enhance and study game situations (Fig. 5). While the dynamics on the field of play have triggered the development and adoption of improved means of video coverage throughout the history of visual media (short-time aperture, portable cameras, film studio equipment), the possibilities of zooming, replay, and slow motion have turned the football pitch and comparable playing fields into quasi-experimental settings for in-vivo observation. In turn, the game itself has become a virtual simulation with actors in a professional, cinematic production. Bodies, grounds, and clothes are adapted into a dense multimedia network, with rules modified in order to accelerate action. The amounts of money currently invested in sports and in television broadcasts of sports events are almost comparable to those in the healthcare or military sectors. Beyond the economic aspect, the live coverage of games also relates to visualisation practices in the life sciences, e.g. with regard to the problems of motion tracking or of representing constant changes of perspective. Matches are staged as an extremely suggestive 4D sensation, made possible by spider-cameras, high-definition images, and fully computerised live-broadcast editing suites. This interplay between the contingent forms of motion in sports and the evolution of visual technologies and styles should be a focus of intensified research.

c) Virtual therapy: Fusing imaging, sensomotorics, and imagination

Fig. 6: Exemplary setting of virtual therapy for the treatment of fear of heights. Left side: Patient wearing HMD and using VR controller. Right side: Virtual scenario inside HMD depicting a high bridge that the patient virtually walks on (courtesy of Oxford VR)

An example of the seemingly inscrutable, media-induced feedback loop between different agencies of interaction and operativity can be found in the realm of virtual therapy. Generally speaking, virtual therapy (also labelled virtual reality therapy or cybertherapy) employs VR scenarios and head-mounted displays (HMDs) for the purpose of exposure therapy.[11] Patients with a fear of heights, for example, are encouraged to engage with scenarios that trigger uncomfortable or frightening emotions (Fig. 6).[12] Such therapy applications promise to reduce patients’ anxieties and stress symptoms through virtual exposure to the feared situation while the patient simultaneously converses with an attending therapist. Compared with traditional exposure therapy, the use of VR in conjunction with opaque HMDs is key to the therapeutic rationale. Virtual reality visualisation differs fundamentally from 2D and even stereoscopic image formats, as its images respond to the user’s movement and position. Virtual reality headsets allow users to combine translational movement (moving forward and backward, up and down, left and right) and rotational movement (tilting side to side, tilting forward and backward, turning left and right) inside a scene. In this way, users become part of the image, in which they can experience a scene three-dimensionally, from any perspective, and at real scale. In theory, this has long been anticipated, often in an emphatic tone, as the final step ‘from observation to participation’ and ‘from screen to space’. When the body becomes an active part of knowledge acquisition, spatial structures can be experienced on the basis of the connection between sensory and motor activity, which allows for new forms of embodied access to the visualisation of research data.

In virtual therapy, the patients’ impression of being mentally and physically immersed in the virtual scenarios is particularly fundamental for triggering emotions that can subsequently be processed therapeutically. The situative and operational adaptation of sensomotorics, imaging and imagination is key to evoking this feeling of immersion. For this purpose, virtual scenarios, the sensor technologies of the HMD, and the sensory-motor input of the patient need to be flawlessly synchronised.[13] This interactional feedback loop is itself intended to be operational and to affect a patient’s body and mind in a way that is considered to be therapeutically effective.[14] Critical analysis needs to disentangle the operational fusion of imaging, sensomotorics, and imagination. However, a particular methodological challenge consists in the shifting agencies between the heterogeneous elements in such ensembles. Any attempt to explore the aesthetic interplays of users’ perception and visualisations must also address performative aspects of embodied interaction and virtual scenarios. Furthermore, a critical assessment of VR technologies needs to discuss their overall impact, notably in view of research that has substantiated notorious cognitive, emotive, and social effects of VR applications.[15]
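The translational and rotational movements listed above are what an HMD tracks in order to keep the virtual scene stable relative to the user. A minimal sketch, restricted for brevity to translation plus one rotation (yaw, turning left and right), shows how a point in the virtual scene is re-expressed in the user's head coordinates whenever the tracked pose changes. The conventions (y pointing up, the user initially facing along -z, positive yaw for a turn to the left) and the names `HeadPose` and `world_to_head` are assumptions for illustration; pitch and roll are deliberately omitted.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class HeadPose:
    # Translational degrees of freedom in metres (hypothetical convention).
    x: float
    y: float
    z: float
    # Rotation about the vertical axis in radians; positive = turn left.
    # Pitch and roll are omitted in this simplified sketch.
    yaw: float


def world_to_head(point: Vec3, pose: HeadPose) -> Vec3:
    """Express a world-space point in head coordinates (y up, forward = -z)."""
    # Undo the translation: position of the point relative to the head.
    px, py, pz = point[0] - pose.x, point[1] - pose.y, point[2] - pose.z
    # Undo the yaw by rotating about the vertical (y) axis by -yaw.
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (c * px - s * pz, py, s * px + c * pz)
```

A point 2 m to the user's left ends up straight ahead, at approximately (0, 0, -2), once the user has turned 90 degrees to the left; stepping 1 m forward brings a point that was 3 m ahead to 2 m ahead. Recomputing this for every tracked frame is what keeps a virtual scenario such as the bridge in Fig. 6 spatially stable while the patient moves.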

d) Mobile games: Pokémon Go

Fig. 7: Augmented reality game Pokémon Go (Wachiwit, 2017).

Another example of the way in which screen-based visual practices dissolve the distinction between image and space is the location-based augmented reality game Pokémon Go. The application encourages users to explore physical space in order to catch virtual figures displayed within the camera stream of a mobile phone (Fig. 7). By aligning camera image and physical space, Pokémon Go players perform operations both within and beyond the boundaries of the screen: the in-game view layers photographic and animated elements depending on the player’s location and the camera’s field of view. This mechanism of merging image, action, and space transforms viewing into using and emphasises the active role of the image in guiding a user’s action and perception. Again, it shifts the focus onto the situation rather than the result of an imaging process. This linking of aesthetics and pragmatics opens up new opportunities to revise historical concepts of perspective, perception, and interaction. Accordingly, adaptive images correspond to a type of applied or operational image that not only shows but also prompts the viewer to carry out an action. Pokémon Go players are not merely image-literate viewers and analysts; they perform operations through and with images, and those images do not just represent or visualise, they actively affect the user’s position in space as well as their disposition towards the image.

e) Surgical practice: Merging medical imaging and human anatomy

Fig. 8: Augmentation of anatomical structures with pre-operative images (M. Queisner, M. Pogorzhelskyi, C. Remde, 2018).

Fig. 9: Alignment of an ultrasound transducer with the ultrasound image using a transparent HMD (M. Pogorzhelskyi, M. Queisner, 2018).

In medicine, studies of minimally invasive practices have shown that the control of instruments requires a cognitive convergence of eye and hand, further strengthening the role of visualisation processes as a precondition for surgical interventions (Friedrich & Queisner 2014). Usually, physicians must cognitively map medical image data, for instance a computed tomography (CT) scan, onto the patient’s body in order to act appropriately in a particular situation. To bridge the gap between the image and the patient’s body, they need to correlate two-dimensional (sectional) images with three-dimensional space. This comparative vision, which draws on a long tradition of visual competence not only in surgical practice but also in art history and other disciplines, is an essential skill in image-based workflows.

Visualisation concepts for mixed reality challenge this strategy of seeing: by looking through a transparent head-mounted display, physicians can superimpose a digital layer onto their point of view that annotates, diminishes, or enhances human anatomy with visual information in a joint perceptual space (Fig. 8). Integrating thermographic and electromagnetic as well as X-ray or ultrasound images into the physician’s action and perception reduces the offset between image and body. This dissolution of the separation between image and body has far-reaching implications: the constant spatio-temporal interplay of image and anatomy creates new opportunities for surgical interventions but also poses challenges for the perception, interpretation, and design of images. Aligning the ultrasound transducer with the ultrasound image, which is otherwise usually displayed on a separate screen, facilitates orientation and the localisation of anatomical structures (Fig. 9). But it also impedes the physician’s view of the operating area, because the image size is fixed to the scale of the patient’s body and the viewing angle needs to adapt to the position of the transducer. In another case, when the physician’s view is superimposed with stereoscopic anatomical 3D models, volumes can be perceived more realistically (Fig. 8). On the other hand, this makes it more difficult to distinguish between the visible surface and the depth information of the anatomical structures.
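The observation that the overlaid ultrasound image keeps the scale of the patient's body and follows the position of the transducer corresponds, in its simplest form, to a pixel-to-millimetre scaling combined with a rigid transformation (rotation and translation). The sketch below is a two-dimensional simplification that assumes a tracked probe position and orientation and a known pixel spacing; clinical systems work in three dimensions and require calibration, which is not modelled here. The function name and its parameters are hypothetical.

```python
import math
from typing import Tuple


def ultrasound_pixel_to_body(u: int, v: int,
                             probe_pos: Tuple[float, float],
                             probe_angle: float,
                             mm_per_pixel: float) -> Tuple[float, float]:
    """Map pixel (u, v) of a 2D ultrasound image (u lateral, v depth) to
    millimetre coordinates in a planar cross-section of the body, given the
    tracked probe position (mm) and orientation (radians)."""
    # Scale pixels to millimetres, so the overlay keeps the body's real size.
    lx, ly = u * mm_per_pixel, v * mm_per_pixel
    # Rotate by the probe's orientation, then translate to the probe's position.
    c, s = math.cos(probe_angle), math.sin(probe_angle)
    return (probe_pos[0] + c * lx - s * ly, probe_pos[1] + s * lx + c * ly)
```

Because the pixel spacing ties the image to physical millimetres, the overlay cannot simply be enlarged for better visibility, and when the probe rotates, the image rotates with it. Both effects reflect the limitations described above.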

In surgical practice, adaptive imaging techniques provide alternative views of the body that allow for new forms of interaction with and through images. But this mixed reality also requires one to reconsider the aesthetics of medical visualisations that need to adapt to the patient’s body in real time, regarding visual parameters such as colour, contrast, texture, contour, lighting, or transparency. It also demands new visual knowledge and methods to comply with this visual disposition, as well as increased awareness of the physical consequences of an intervention supported or even directed by digital imaging. Furthermore, the conversion of soft tissue into geometric shapes turns the manipulation of anatomy into an algorithmic problem. Sensor technology here does not just visualise anatomy; it renders the topographical structure of anatomy into a machine-readable form. Cutting tissue, for instance, is then not based on human vision and interpretation alone but can be executed by robotic systems. Accordingly, decisions and intervention objectives can be anticipated by hardware and software systems that intervene in the surgical workflow and restrict actions. In this way, the diagnostic image is seamlessly absorbed into surgical workflows in the operating theatre to such an extent that the digital image replaces the real body as the primary object of reference.

f) Assembly and processes: Synchronising image and action in industrial applications

Fig. 10 a,b: A case study conducted by Airbus seeks to combine the construction site and instructions into a joint perceptual space by eliminating the offset between image and object (Microsoft Inc., 2019).

In assembly processes, switching back and forth between a screen and the workspace impairs hand-eye coordination, as many manufacturing and assembly situations require continuous comparison and coordination between screen-based instructions and the assembly of parts. In aircraft assembly, for example, instructional visualisations are usually not adapted to the user’s position and line of sight. By looking through a transparent head-mounted display, construction workers in one case study conducted by Airbus were able to see both the physical space behind the display and an on-screen visualisation (Fig. 10). Through such a display, the workplace can be superimposed with images that annotate the user’s vision with visual information matching the scale and position of physical objects. The image adapts to the observer’s point of view in real time. Whereas juxtaposing workspace and image on a separate screen would require continuous comparison between image and object, the head-mounted display combines them in a joint perceptual space.

In this way, adaptive imaging overcomes the offset between an instructional image and the assembly site and puts forward a new practice of interacting with spatially related information. This concept can be employed in a variety of industrial applications that require the synchronisation of images and space, for example in architectural planning, in the automotive industry, or in product design. While an on-screen visualisation is usually not directly related to the spatial context beyond the screen, transparent displays allow users to see and interact simultaneously with a physical object behind the display and a visualisation on the display. This real-time connection of sensor and motor systems in mixed reality applications signals a growing convergence of virtuality and physicality as well as of image and object.

Challenges and implications for image and media theory

The cases presented above demonstrate the extent to which digital imagery, combined with sensing, display, and transmission technologies, affects and guides action and perception. None of the cases presents new empirical findings on its own, but together they show a range of digital imaging practices that can be systematised under the umbrella term of adaptive images. These images synthesise action and space in a way that leads to a new notion of ‘adaptivity’, which clearly differs from existing concepts used e.g. in evolutionary biology, ecology, sociology, or cultural theory. Adaptive properties as such may be nothing new (notably if the term ‘adaptive’ is understood in the broadest possible sense), but digital adaptivity appears to depend on more recent developments in computing and sensing which affect how users perceive, interact, and decide. Digital adaptivity refers primarily to the subtle ability of interactive imaging to display real-time information in accordance with spatial properties and to increase the possibilities of feedback, surveillance, and customisation.

Professional fields of application such as image-guided surgery (where interfaces, algorithms, and scripts have begun to anticipate human decisions, and real-time assistance systems replace the material body as the primary object of reference) reveal the extreme consequences that can result from the described assemblage of tools.[16] They show how the concept of adaptation affects not only the image but the object itself: virtualisation turns the digital copy or digital twin into a new type of ‘original’. It creates an independent reference point that gradually eliminates the difference between the representation and the represented and embeds imaging processes deeply into action and perception. This leads to at least three challenges for image and media theory:

a) It needs to disentangle the different theoretical threads and developments synthesised in adaptive imaging. A terminological effort to define adaptive images requires not only reviewing existing theoretical approaches to digital images from aesthetics, image theory, or visual media studies but also addressing the operational and spatial aspects of imaging. Their interrelation within adaptive media calls for an interdisciplinary analysis that is technically informed and takes into account the history of techniques of representation, action theory, technologies of sensing, or design;

b) It needs to address a methodological dilemma: when it comes to investigating imaging processes, the analysis is often narrowed down to the level of representation. However, addressing images in the context of adaptivity usually relies on the analysis of situations which cannot easily be depicted. In addition, adaptive images are only experienced individually, i.e. exclusively from the perspective of their users, which impedes the observation and documentation of the respective images. The challenge for established approaches to image analysis is therefore to develop a method that captures the iterative interplay of structures and processes both in front of and behind the image, in order to account for the aesthetic, operational, and spatial aspects of adaptive images; and

c) To better understand the potential flaws and threats of the technology, it needs to formulate a research agenda that analyses the social and cultural implications of adaptive imaging with regard to its impact on the production, dissemination, and use of digital images. This applies particularly to contexts of application and visual practice where images increasingly function in a recursive feedback loop with perception, interaction, and decision-making and extend their reach beyond the visual domain.

At this point, we are not yet able to fully flesh out the demands of the latter two challenges; nevertheless, we would like to outline productive and inspiring points of contact for reviewing and extending existing theoretical approaches with a view to developing a theory of adaptive images. The ‘problem-events’ discussed above allow us to identify three basic theoretical characteristics of adaptive images: the spatial, aesthetic, and operational dimensions of adaptivity.

Aesthetic dimension of adaptive images

From an aesthetic standpoint, adaptive imaging changes the modes of creative image production and imagination by providing instant forms of processing and visualisation. As adaptive images convey their own spatial and operational conditions, they afford both processual visual knowledge and dynamic modes of human-technology interaction. With regard to aesthetics, the central challenge is the constant spatio-temporal adaptation between image and space. So-called ‘augmentation’ produces its own language of form, one that must overcome the aesthetic difference between image and space through particular forms of depiction. From the perspective of visual design, the central task seems to be the creation of new forms of representation that address the merging of image and space. How will design strategies (re)define visual parameters such as colour, contrast, texture, contour, lighting, or transparency? What kinds of interaction and manipulation strategies do adaptive imaging technologies convey in order to cope with limited access to physical space, e.g. the integration of physical objects or multi-user situations?

The history of art and visual representation documents a continuous evolution of modes and tools of production and observation that are relevant not only in historical terms; they reveal changing styles of representation (virtual effects, scaling, false colouring, time-based coding, seriality, graphical elements) as well as their cultural effects. The respective field of research ranges from the introduction of linear perspective and projection to other means of illusion and immersion;[17] from photography to motion pictures, particularly in relation to the life sciences;[18] from electronic image processing (photo, video) to interactive real-time simulations and gaming;[19] and from pioneering examples of interactive art[20] to contemporary interaction and interface design.[21]

An aesthetic approach to developing a concept of adaptive images calls for a revision of the close relationship between technology and form, which ranges from imaging algorithms to the design of graphical and tactile interfaces. This endeavour demands a conceptual combination and methodological operationalisation of media theory, technology, and design studies as well as image criticism. In this way, it can contribute to a general theory of the image (in terms of pictoriality, iconicity, and representation) and also update the concept of ‘visuality’, which, in terms of visual culture theory, moves closer to the concept of ‘vision’ under the coercive influence of modern optics and sensory physiology.[22] This is all the more pertinent since the potential loss of a distinction between image and reality has been a leitmotif of cultural criticism since the early 20th century and has also accompanied the evolution of electronic media. The criticism of simulation techniques and early forms of immersion, taken as evidence for psycho-physical ‘alienation’, went hand in hand with the promise of total virtualisation in the 1990s, as in the philosophical work of Jean Baudrillard and Vilém Flusser.[23]

From the historical perspective of avant-garde aesthetics, adaptive imaging once again promises to blur the boundaries between representation and reality to such a degree that the two spheres seem interchangeable in some contexts. Adaptive images are more than translations or output formats of given data, practices, or contexts. Augmenting the image presented in the perceptual field bridges devices and representational processes. In doing so, augmentation produces its own language of form that blurs the difference between image and represented space. The technological promise of increased proximity to reality or a greater variance of action and manipulation (through real-time interaction, 4D visualisations, audio-visual augmentation) is juxtaposed with the limitations of screen-based media in representing space, texture and chroma, transparency and opacity, and with the individual capacity to perceive, interpret, and reflect on the different types of information. Hence, adaptation should not be understood merely as a ‘perfect match’ between a given reality and its electronic representation, in terms of a convincing optical simulation; it also implies the criteria of responsiveness, individual usability, and perception.

Operational dimension of adaptive images

With image production, processing, and transmission all possible in real time, images are increasingly integrated and embedded into visual practice: viewing images has predominantly turned into using images.[24] Interacting with visual devices and interfaces, such as virtual reality headsets, augmented reality apps, or navigation systems, situates users in both virtual and physical space.[25] Hence, this type of image entails a certain agency within and beyond the screen that exceeds the range of human actions.[26] Images themselves become operational as they interact with objects and space by their own means and in a responsive feedback loop. The examples discussed above document such situative feedback loops of visual representation, corporeal experience, and operational impact, which result in a new type of image: one perceived as dynamic and autonomous, which changes through its application and seems to become independent in the course of use.

For the endeavour of developing a theory of adaptive imaging, this means acknowledging the agency of media and, in particular, the agency of images in order to understand them in operational rather than representational terms. An analysis of adaptive images therefore requires conceptualising images in the context of operation, e.g. when using a virtual reality headset, as well as asking how imaging processes themselves become operational, e.g. by guiding a surgical intervention in clinical medicine. Studies of the concept or of cases of operational images often draw on the filmmaker Harun Farocki’s observation that images used to guide rockets ‘do not represent an object, but rather are part of an operation’.[27] As Farocki observes, computers refer to data instead of images as the basis for decisions, which makes a form-based and application-related critique of the image all the more pressing. Building on this observation, the conditions of acting with and through digital media, as well as the material transformations they engender, have been examined in various application contexts.[28] Such studies provide productive starting points for analysing the operational dimension of adaptive images.

Spatial dimension of adaptive images

Digital images increasingly depend on the context and situation in which they are used. As adaptive visualisations, they also increasingly determine the ways people interact with their physical environment. Mobile and location-based computing already integrates the aspect of place into the use of digital media, for instance when the map view on a smartphone indicates the user’s position based on localisation technologies such as satellite or network information. In addition, recent types of computers and sensors (e.g. in smartphones or virtual reality headsets) not only register, process, and transmit location-based information but also recognise topological information: they make analogue space computable by ‘understanding’ the spatial environment. This shift towards the spatiality of imaging processes has become possible only through real-time image processing and the use of sensor technologies that register the qualities of space. Today, the technologies of embodied and spatial computing link and augment the physical space – and not only a geolocation – with digital data. This has made possible interactive 3D simulations such as virtual reality applications, which require dynamic, responsive, and situated images that continuously adapt to physical space and location.

While the electronic and digital image was initially taken as a symbol for the loss of reference to place, object, and matter, and thus for a fundamental technological and epistemological change,[29] its connection to sensor and motor systems now rather supports the convergence of the virtual and the physical. Research on this reference to space ranges from works on early portable display media[30] to cartography, satellite imaging, geolocation, and tracing systems,[31] and from techniques of remote vision[32] to newer works on extended reality.[33] Recent approaches concentrate on more specific aspects, referring to the mobility,[34] the architectural structure,[35] the territory,[36] the situatedness,[37] or the three-dimensionality[38] of screens and visualisations, to reflect the turn towards the particular role of the image in situating action and perception. These more recent works share the observation that the distinction between the image and its physical context is disappearing. They shift the focus from the internal relations within the image, i.e. its composition, towards the iterative interplay of structures and processes in front of and behind the image, e.g. the metadata that feeds the augmentation. Looking beyond the visual boundaries of images, they show that the effect and appearance of images depend on the situation and the space in which they are employed. Describing the adaptivity of digital imaging processes in more detail, and with particular regard to the dissolving distinction between image and space, may also shed new light on the older anthropological question of the ‘place of images’ between body and medium.[39] With living bodies literally plugged into the pictorial machinery, as in military drone operations, the continuous connection to sensor data entails tangible restrictions and risks that are not yet fully understood. The relation of image and space raises several methodological questions with regard to the digital image.
What kind of narrative visual strategies are employed to connect virtual and physical space, and how do they affect the users’ ability to navigate through the image?

Visual media continue to gain importance in decision-making processes as a foundation for human thought and action, and they take on their specific form as a consequence of this role. For this very reason, the technical basis and the aesthetic results of digital imaging should be considered not as opposites but as standing in a relationship of permanent tension. In adaptive images, the conventional opposition of form and production is subverted in a way that is difficult to define. The role of technical infrastructure such as software in image production and circulation has been discussed in a number of contributions,[40] but these have neither redefined the status of the image and its capacity for action nor revised them to reflect new conditions. A concept of the adaptive image calls for a rigorously application-based concept of the ‘image’ that recognises the historical evolution, significance, and specificity of visual cultures and that can account for their processing and aggregation in the digital sphere.

Regardless of their simulative and immersive character, adaptive images remain constantly linked to external situations, factors, and tasks. While it may be obvious that visual meaning depends on context, reception, and action, adaptive images represent a denser interweaving of aesthetics and pragmatics. The effects of practices related to adaptive imaging range from psychological or epistemic effects to more material transformations. Accordingly, further case studies need to systematically describe the phenomenon of ‘adaptivity’ across various forms of application in order to determine the extended reach of digital images. Such studies will make it possible to account theoretically and methodologically for the multi-layered impacts of these dynamic, recursive, and interventional entanglements of image, space, and action – namely, adaptive images.

Authors

Dr. Kathrin Friedrich is a postdoctoral researcher in media studies and scientific coordinator of the research group ‘SENSING: The Knowledge of Sensitive Media’ (funded by the Volkswagen Foundation) at the Brandenburg Centre for Media Studies and Potsdam University. She is a member of the research project ‘Adaptive Images. Technology and Aesthetics of Situative Digital Imaging’ as well as co-founder of the Adaptive Imaging group.

Moritz Queisner is a research associate in the project ‘Adaptive Images. Technology and Aesthetics of Situative Digital Imaging’ at Karlsruhe University of Arts and Design and a guest researcher at Charité – Universitätsmedizin Berlin. Moritz has an academic background in media studies and science and technology studies. His academic work investigates imaging and interaction in contemporary media technology. He is a co-founder of the Adaptive Imaging group (www.adaptiveimaging.org), a collective of scholars, designers, and scientists who study image-guided practices in contemporary media technology.

Matthias Bruhn is Professor for Art Studies and Media Theory at Karlsruhe University of Arts and Design. He studied art history and philosophy in Hamburg (Dr. phil. 1997) where he also directed the research department ‘Political Iconography’ at the Warburg Haus (until 2001). After several fellowships and a position as coordinator of the World Heritage Studies programme in Cottbus, he worked as a permanent research associate at Humboldt University Berlin and as principal investigator of the Cluster of Excellence ‘Image Knowledge Gestaltung’. His research focuses on scientific as well as political and economic functions of images, the development of visual media, and comparative methods in art history.

Acknowledgements

The authors would like to thank Carmen Westermeier and Lydia Kähny for text editing and the Einstein Foundation Berlin for funding the proofreading by Jacob Watson.

References

Andrejevic, M. and Burdon, M. ‘Defining the Sensor Society’, Television & New Media, Vol. 16, 2014: 19-36.

Ash, M. Gestalt psychology in German culture, 1890-1967: Holism and the quest for objectivity. New York: Cambridge University Press, 1998 (orig. in 1995).

Baudrillard, J. Simulacra and simulation. Ann Arbor: University of Michigan Press, 1995 (orig. in 1981).

Belting, H. ‘Der Ort der Bilder’ in Das Erbe der Bilder. Kunst und moderne Medien in den Kulturen der Welt, edited by H. Belting and L. Haustein. München: C.H. Beck, 1998: 34-53.

Berlant, L. ‘On the Case’, Critical Inquiry, Vol. 33(4), 2007: 663-667.

Bruhn, M. and Hemken, K. (eds). Modernisierung des Sehens. Sehweisen zwischen Künsten und Medien. Bielefeld: Transcript, 2008.

Crandall, J. ‘The Geospatialization of Calculative Operations. Tracking, Sensing and Megacities’, Theory, Culture & Society, Vol. 27(6), 2010: 68-90.

Crary, J. Techniques of the observer: On vision and modernity in the nineteenth century. Cambridge: MIT Press, 1990.

Damisch, H. The origin of perspective. Cambridge: MIT Press, 1994 (orig. in 1987).

Dinkla, S. Pioniere Interaktiver Kunst von 1970 bis heute. Myron Krueger, Jeffrey Shaw, David Rokeby, Lynn Hershman, Graham Weinbren, Ken Feingold. Karlsruhe-Ostfildern: Cantz Verlag, 1997.

Eder, J. and Klonk, C. Image operations: Visual media and political conflict. Oxford: Oxford University Press, 2017.

Edgerton, S. The Renaissance rediscovery of linear perspective. New York: Basic Books, 1975.

Elsaesser, T. ‘Digital Cinema: Delivery, Event, Time’ in Cinema futures: Cain, Abel or cable? The screen arts in the digital age, edited by T. Elsaesser and K. Hoffmann. Amsterdam: Amsterdam University Press, 1998: 201-222.

Farocki, H. ‘Phantom Images’, Public, 2004: 12-124.

Feiersinger, L., Friedrich, K., and Queisner, M. (eds). Image action space: Situating the screen in visual practice. Berlin-Boston: De Gruyter, 2018.

Feiersinger, L. ‘Spatial Narration. Film Scenography Using Stereoscopic Technology’ in Image action space: Situating the screen in visual practice, edited by L. Feiersinger, K. Friedrich, and M. Queisner. Berlin-Boston: De Gruyter, 2018: 69-78.

Flusser, V. Into the universe of technical images. Minneapolis: University of Minnesota Press, 2011 (orig. in 1992).

Franz, N. and Queisner, M. ‘The Actors Are Leaving the Control Station. The Crisis of Cooperation in Image-guided Drone Warfare’ in Image action space: Situating the screen in visual practice, edited by L. Feiersinger, K. Friedrich, and M. Queisner. Berlin-Boston: De Gruyter, 2018: 115-132.

Freeman, D. et al. ‘Automated psychological therapy using immersive virtual reality for treatment of fear of heights: a single-blind, parallel-group, randomised controlled trial’, Lancet Psychiatry, Vol. 5, 2018: 625-632.

Friedberg, A. The virtual window: From Alberti to Microsoft. Cambridge: MIT Press, 2006.

Friedrich, K. and Diner, S. ‘Virtuelle Chirurgie’ in Handbuch Virtualität, edited by D. Kasprowicz and S. Rieger. Wiesbaden: Springer, 2019: https://doi.org/10.1007/978-3-658-16358-7_19-1.

Gregory, D. ‘From a View to a Kill. Drones and Late Modern War’, Theory, Culture & Society, Vol. 28, 2012: 188-215.

Gregory, D. ‘The Territory of the Screen’, MediaTropes, Vol. 6, No. 2, 2016: 126-147.

Hansen, M. Feed-forward: On the future of twenty-first-century media. Chicago: University of Chicago Press, 2015.

Hillis, K. Digital sensations: Space, identity, and embodiment in virtual reality. Minneapolis: University of Minnesota Press, 1999.

Hinterwaldner, I. The systemic image: A new theory of interactive real-time simulations. Cambridge: MIT Press, 2017.

Hoel, A. ‘Operative Images: Inroads to a new paradigm of media theory’ in Image action space: Situating the screen in visual practice, edited by L. Feiersinger, K. Friedrich, and M. Queisner. Berlin: De Gruyter, 2018: 11-27.

Huhtamo, E. ‘Screen Tests: Why Do We Need an Archaeology of the Screen?’, Cinema Journal, Vol. 51, No. 4, 2012: 144-148.

Jones, M. ‘Vanishing Point: Spatial Composition and the Virtual Camera’, Animation: An Interdisciplinary Journal, Vol. 2, No. 3, 2007: 225-243.

Jones, N. Spaces mapped and monstrous: Digital 3D cinema and visual culture. New York: Columbia University Press, 2020.

Kaerlein, T. ‘Aporias of the Touchscreen: On the Promises and Perils of a Ubiquitous Technology’, NECSUS. European Journal of Media Studies, Vol. 2, Autumn 2012: https://necsus-ejms.org/aporias-of-the-touchscreen-on-the-promises-and-perils-of-a-ubiquitous-technology/ (accessed on 26 July 2020).

Madary, M. and Metzinger, T. ‘Real Virtuality. A Code of Ethical Conduct. Recommendations for Good Scientific Practice and the Consumers of VR-technology’, Frontiers in Robotics and AI, February 2016: http://journal.frontiersin.org/article/10.3389/frobt.2016.00003/full (accessed on 26 July 2020).

Monteiro, S. ‘Fit to Frame: image and edge in contemporary interfaces’, Screen, Vol. 55, No. 3, 2014: 360-378.

North, M. and North, S. ‘Virtual Reality Therapy’, Encyclopedia of Psychotherapy, Vol. 2, 2002: 889-893.

Parks, L. ‘Orbital Viewing: Satellite Technologies and Cultural Practice’, Convergence, Vol. 6, No. 10, 2000: https://doi.org/10.1177/135485650000600402.

Pennington, A. ‘Behind the scenes: The Mandalorian’s groundbreaking virtual production’, IBC365, 4 March 2020: https://www.ibc.org/trends/behind-the-scenes-the-mandalorians-groundbreaking-virtual-production/5542.article (accessed on 26 July 2020).

Queisner, M. ‘Medical Screen Operations: How Head-Mounted Displays Transform Action and Perception in Surgical Practice’, MediaTropes, Vol. 6, No. 1, 2016: 30-51.

_____. ‘Disrupting Screen-Based Interaction. Design Principles of Mixed Reality Displays’ in Mixed Reality, edited by C. Busch, C. Kassung, and J. Sieck. Glückstadt: Hülsbusch, 2017: 133-144.

Reichert, R., Richterich, A., Abend, P., Fuchs, M. and Wenz, K. (eds), Mobile Digital Practices, Digital Culture & Society, Vol. 3, No. 2, 3 January 2017.

Rogers, S. ‘Virtual Production And The Future Of Filmmaking – An Interview with Ben Grossmann, Magnopus’, Forbes Magazine, 29 January 2020: https://www.forbes.com/sites/solrogers/2020/01/29/virtual-production-and-the-future-of-filmmaking-an-interview-with-ben-grossman-magnopus/ (accessed on 26 July 2020).

Ross, M. ‘Virtual Reality’s New Synesthetic Possibilities’, Television & New Media, Vol. 21, No. 3, 2020: 297-314.

Sauer, I., Queisner, M., Tang, P., Moosburner, S., Hoepfner, O., Horner, R., Lohmann, R., Pratschke, J. ‘Mixed Reality in visceral surgery – Development of a suitable workflow and evaluation of intraoperative use-cases’, Annals of Surgery, Vol. 266, No. 5, 1 August 2017: https://doi.org/10.1097/SLA.0000000000002448.

Schröter, J. 3D: History, theory and aesthetics of the transplane image. New York: Bloomsbury, 2013.

_____. ‘Viewing Zone. The Volumetric Image, Spatial Knowledge and Collaborative Practice’ in Image action space: Situating the screen in visual practice, edited by L. Feiersinger, K. Friedrich, and M. Queisner. Berlin-Boston: De Gruyter, 2018: 147-158: https://doi.org/10.1515/9783110464979-012.

Sherman, W. and Craig, A. Understanding virtual reality: Interface, application, and design. San Francisco: Morgan Kaufmann, 2003.

Siegert, B. ‘(Nicht) Am Ort. Zum Raster als Kulturtechnik’, Thesis, Vol. 3, 2003: 93-104.

Strauven, W. ‘The Archaeology of the Touchscreen’, Maske und Kothurn, Vol. 58, No. 4, 2014.

Suchman, L. ‘Situational Awareness: Deadly Bioconvergence at the Boundaries of Bodies and Machines’, MediaTropes, Vol. 5, No. 1, 2015: 1-24.

Sutherland, I. ‘The Ultimate display’ in Information processing: Proceedings of the International Federation for Information Processing Congress, New York City, May 24 – 29, 1965, Washington, D.C.: Spartan Books, 1965.

Sæther, S. and Bull, S. (eds). Screen space reconfigured. Amsterdam: Amsterdam University Press, 2020.

Thielmann, T. ‘Mobile Medien’ in Handbuch Medienwissenschaft, edited by J. Schröter. Stuttgart: Metzler, 2014: 350-359.

Verhoeff, N. Mobile screens: The visual regime of navigation. Amsterdam: University of Amsterdam, 2012.

Virilio, P. The aesthetics of disappearance. New York: Semiotext(e), 1991.

Whissel, K. ‘Digital 3D, Parallax Effects, and the Construction of Film Space in Tangled 3D and Cave of Forgotten Dreams 3D’ in Screen space reconfigured, edited by S. Sæther and S. Bull. Amsterdam: Amsterdam University Press, 2020: 77-103.

[1] Andrejevic & Burdon 2014; Crandall 2010; Hansen 2015.

[2] Friedrich & Diner 2019; Queisner 2016.

[3] Franz & Queisner 2018.

[4] Berlant 2007, p. 663.

[5] Jones 2020, p. 63 ff.; Ross 2020.

[6] Rogers 2020.

[7] Pennington 2020.

[8] Ibid.

[9] Whissel 2020.

[10] Feiersinger 2018; Jones 2007.

[11] North & North 2002.

[12] Freeman et al. 2018.

[13] Hillis 1999, pp. 1-29; Sherman & Craig 2003, pp. 6-17.

[14] Friedrich 2018.

[15] Madary & Metzinger 2016.

[16] Sauer et al. 2017.

[17] Ash 1995; Damisch 1987; Edgerton 1975; Friedberg 2006; Siegert 2003.

[18] e.g. Cartwright 1997.

[19] Hinterwaldner 2017.

[20] Dinkla 1997.

[21] Andersen & Pold 2011; Hadler & Haupt 2016.

[22] Crary 1990; Bruhn & Hemken 2008.

[23] Baudrillard 1995; Flusser 1992.

[24] Feiersinger, Friedrich & Queisner 2018; Verhoeff 2012.

[25] Kaerlein 2012; Monteiro 2014; Reichert et al. 2017; Sæther & Bull 2020; Strauven 2014.

[26] Franz & Queisner 2018; Suchman 2015.

[27] Farocki 2004, p. 17.

[28] Eder & Klonk 2017; Hinterwaldner 2013; Hoel 2018.

[29] Flusser 1992; Virilio 1991.

[30] Sutherland 1965.

[31] Parks 2000.

[32] Franz & Queisner 2018; Vertesi 2009.

[33] Queisner 2017.

[34] Verhoeff 2012; Thielmann 2014.

[35] Elsaesser 1998.

[36] Gregory 2016.

[37] Suchman 2015.

[38] Schröter 2014, 2018.

[39] Belting 1998.

[40] Berry 2011; Chun 2005; Manovich 2001.